search engine

Google Explains How it Organizes Information in Search

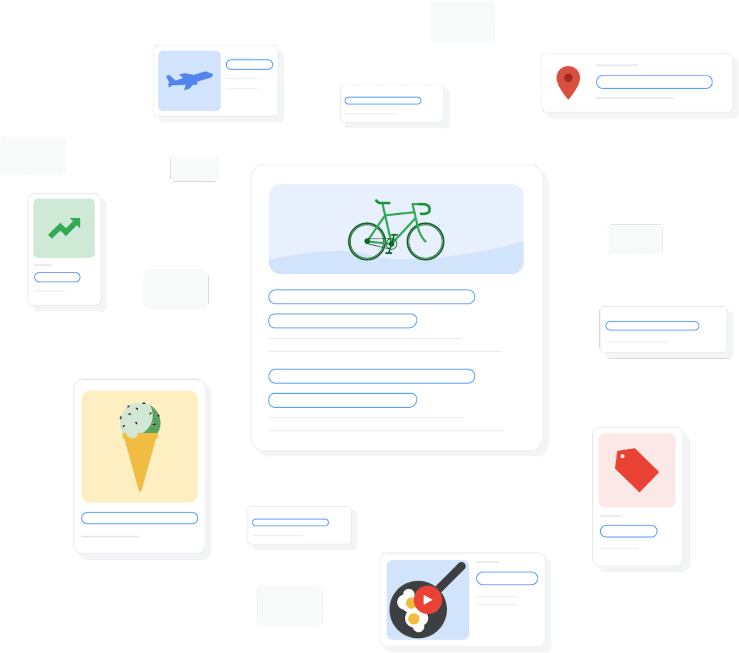

Google’s search algorithms are complex and constantly evolving to provide users with the most relevant and helpful search results. While I don’t have the exact details of the latest algorithm changes or updates, I can provide a general overview of how Google organizes information in search results:

1. Crawling and Indexing: Google’s search bots, often referred to as “Googlebot,” crawl the web to discover and index web pages. During the crawling process, Googlebot follows links from one page to another, indexing the content it finds.

2. Ranking Algorithms: Google uses a variety of algorithms to rank web pages in search results. The most well-known algorithm is PageRank, which evaluates the quantity and quality of links pointing to a page. However, Google employs hundreds of ranking factors to determine the relevance and authority of a page.

3. Relevance and Quality: Google’s main goal is to provide users with the most relevant and high-quality content in response to their search queries. The algorithms assess factors like keywords, user intent, content quality, relevance, user experience, and more.

4. User Intent: Google aims to understand the intent behind a user’s search query. It evaluates whether the user is seeking information, wants to make a purchase, or needs a specific service. This helps Google display results that match the user’s intent.

5. Featured Snippets: Google often provides featured snippets, which are concise summaries of the information from a web page that directly answer a user’s query. These are displayed at the top of search results and aim to provide quick and accurate answers.

6. Local Search: For location-based queries, Google’s algorithms consider the user’s location to provide results relevant to their geographic area. This is particularly important for local businesses and services.

7. Mobile-Friendly and Core Web Vitals: Google prioritizes mobile-friendly websites and pages that provide a good user experience, as well as those that meet Core Web Vitals metrics related to loading, interactivity, and visual stability.

8. Structured Data: Websites that use structured data markup (such as Schema.org) can provide additional context to Google about their content, helping search engines understand the content’s purpose and structure.

9. Freshness: Google considers the freshness of content, particularly for topics that require up-to-date information, such as news or recent events.

It’s important to note that Google’s algorithms are proprietary and not fully disclosed. The search landscape is continually evolving, and Google regularly updates its algorithms to improve the quality of search results and combat spammy practices. For the most accurate and up-to-date information about how Google organizes information in search, I recommend referring to Google’s official documentation and announcements.
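To make the link-based ranking idea from item 2 concrete, here is a minimal sketch of PageRank as described in the original published paper. It is a toy power iteration over a made-up four-page link graph, not Google's production ranking. The function name, the page names, the damping value of 0.85, and the iteration count are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Toy power-iteration PageRank over {page: [pages it links to]}."""
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with the small "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped rank evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical site: three pages link to "home", nothing links to "orphan".
toy_web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page:8s} {score:.3f}")
```

In this toy graph the page with the most incoming links ends up with the highest score and the page with none ends up with the lowest. That is the intuition behind counting the quantity and quality of links.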

What Does a Zebra Sound Like? Ask Google

The animal kingdom’s symphony is as diverse as the creatures themselves, each species contributing a unique sound to the cacophony of nature. Zebras, those iconic striped equids, hold a certain enigmatic allure. Have you ever wondered, “What does a zebra sound like?” This essay delves into the intriguing world of zebra vocalizations and the role that Google plays in providing answers that connect us with the wildlife symphony.

Zebras: Striped Wonders of the Savanna

1. Zebra Diversity: Zebras, though visually similar, are represented by three species: the plains zebra, Grevy’s zebra, and the mountain zebra.

2. Social Nature: Zebras are known for their social behavior, often congregating in herds that provide safety in numbers.

The Melodic Language of Zebras

1. Vocal Repertoire: Zebras communicate through various vocalizations, including braying, barking, snorting, and a distinctive high-pitched call known as “whickering.”

2. Intra-Herd Communication: Zebras employ these sounds to convey emotions, alert others to danger, establish territory, and maintain cohesion within the herd.

The Curious Question: “What Does a Zebra Sound Like?”

1. Google’s Role as a Gateway: Google’s search engine serves as a gateway to information, answering queries about the enigmatic vocalizations of zebras.

2. Accessing Wildlife Sounds: Google allows users to explore audio recordings of zebra vocalizations, capturing the essence of these animals’ communication.

Unlocking the Auditory Archive: Online Resources

1. Wildlife Sound Databases: Online platforms like the Macaulay Library at the Cornell Lab of Ornithology house a treasure trove of wildlife sounds, including those of zebras.

2. Educational Portals: Websites and educational institutions curate and share zebra vocalizations to enhance our understanding of these remarkable creatures.

The Evolution of Zebra Sound Research

1. Scientific Curiosity: Researchers have delved into the world of zebra vocalizations, studying their context, variations, and meanings.

2. Technology’s Impact: Advancements in audio recording technology and analytical tools have expanded our ability to capture and study zebra sounds.

Interpreting Zebra Sounds: Communication and Beyond

1. Communication Dynamics: Zebra vocalizations unveil a world of communication intricacies within the herd, reflecting social bonds and behavioral cues.

2. Environmental Clues: Beyond communication, zebra sounds offer insights into their environment, helping us understand the auditory landscape of their habitats.

Cultivating Nature Appreciation and Conservation

1. Empathy Through Sound: Hearing zebra sounds fosters empathy and connection with these creatures, highlighting the importance of wildlife conservation.

2. Inspiring Future Naturalists: The accessibility of zebra vocalizations through Google and online resources encourages curiosity among future generations.

As we ponder the question, “What does a zebra sound like?” we are reminded of the intricate connections that bind us to the natural world. Google’s role in providing access to zebra vocalizations amplifies our understanding of these creatures’ communication and the symphony of the savanna. By opening the auditory gateway to nature’s melodies, we can embrace the beauty, complexity, and fragility of the animal kingdom, inspiring us to cherish and conserve the wildlife that shares our planet.

How to use Schema to create a Google Action

Creating a Google Action using Schema requires integrating structured data markup on your website or content to enable Google to understand and process your data effectively. Google Actions are voice-activated apps for Google Assistant that provide users with valuable information or perform specific tasks. Schema markup helps define the content and context of your data, making it easier for Google to comprehend and present to users through voice interactions.

Here’s a step-by-step guide on how to use Schema to create a Google Action:

1. Choose the Right Schema Markup:

Select a relevant Schema markup type that aligns with the content or service you want to provide through your Google Action. Common Schema markup types include “FAQPage,” “HowTo,” “Recipe,” “Event,” and more. Choose the one that best suits your use case.

2. Implement Schema Markup:

Add the Schema markup to the HTML of the web page that corresponds to the content you want to make available through your Google Action. This involves adding the appropriate Schema properties, such as name, description, URL, and other relevant details. You can manually add the markup using JSON-LD, microdata, or RDFa formats.

3. Validate Schema Markup:

Use Google’s Rich Results Test or the Schema Markup Validator (the successor to Google’s retired Structured Data Testing Tool) to validate your Schema markup. This step ensures that your markup is correctly implemented and will be interpreted accurately by Google.

4. Register Your Action on Google:

To create a Google Action, you need to create a project on the Google Actions Console. This is where you’ll define the conversational interface and interactions for your Action. Go to the Google Actions Console (https://console.actions.google.com/) and create a new project.

5. Define Intents and Utterances:

Within your Google Action project, define the intents (user requests) and associated sample utterances that users will say to invoke your Action. For each intent, map it to the appropriate Schema markup on your website.

6. Set Up Dialogflow (Optional):

You can use Dialogflow, Google’s natural language processing platform, to build the conversational flow of your Google Action. Link your Dialogflow project to your Google Action project to create a seamless interaction experience.

7. Test Your Google Action:

Test your Google Action using the simulator provided in the Google Actions Console. Ensure that the intents are correctly triggering and that the responses align with the structured data you’ve marked up.

8. Submit for Review:

Once you’re satisfied with the functionality and testing of your Google Action, submit it for review by Google. This process ensures that your Action meets Google’s quality and content guidelines.

9. Deploy Your Google Action:

After Google approves your Action, it will be available to users on Google Assistant-enabled devices. Users can invoke your Action by saying “Hey Google” or “Okay Google,” followed by the name of your Action and the intent you’ve defined.

Using Schema markup to create a Google Action enhances the relevance and accuracy of the information your Action provides to users. It also ensures a smooth and intuitive user experience. Remember that creating a Google Action involves both technical and conversational design aspects, so a well-rounded understanding of both is essential for a successful implementation.
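As a rough illustration of steps 1 and 2, the sketch below builds FAQPage markup in JSON-LD with Python's standard `json` module and wraps it in the script tag that would go into the page's HTML. The helper names `faq_jsonld` and `as_script_tag` and the sample question are hypothetical. The property names (`mainEntity`, `acceptedAnswer`, `text`) follow the Schema.org FAQPage vocabulary.

```python
import json


def faq_jsonld(questions_and_answers):
    """Return FAQPage structured data as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions_and_answers
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def as_script_tag(jsonld):
    """Wrap a JSON-LD string in the script element used to embed it in HTML."""
    return '<script type="application/ld+json">\n' + jsonld + "\n</script>"


# Hypothetical content for a single-question FAQ page.
markup = faq_jsonld(
    [("What does a zebra sound like?", "Zebras bray, bark, and snort.")]
)
print(as_script_tag(markup))
```

Generating the markup from data, rather than typing it by hand, keeps it syntactically valid. It should still be run through a validator as described in step 3, and answer text containing `</script>` would need escaping before being embedded this way.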

10 SEO Hacks to Skyrocket Your Website’s Traffic: A Guide for Website Owners

- Make sure that your website is SEO optimized. SEO optimization is the process of making sure your website is designed in a way that makes it easier for search engines to crawl and index your website. This involves optimizing your page titles, meta descriptions, and keyword density. Additionally, you should make sure that your website is optimized for mobile devices, as this is becoming increasingly important for SEO.

- Create content that is of high quality. Content is one of the most important factors for SEO, and if you want to increase your website’s traffic, you should make sure that your content is well-written, informative, and interesting. Additionally, you should create content that is relevant to the topics that your target audience is interested in.

- Make sure to use the right keywords. Keywords are the words and phrases that people use to search for information online. By targeting the right keywords, you can increase the chances of your website appearing in the search engine results pages, which can help to drive more traffic to your website.

- Utilize social media. Social media can be a great way to promote your website and increase its visibility. By sharing your content across different social media platforms, you can increase the chances of people discovering your website and visiting it.

- Use backlinks. Backlinks are links that are placed on other websites that lead back to your own website. They are a great way to increase your website’s visibility and can help to drive more traffic to your website.

- Make sure your website is secure. Security is an important factor when it comes to SEO, and if your website is not secure, you may find that it is penalized by search engines. Make sure that your website is secure by using an SSL certificate, and other security measures.

- Take advantage of local SEO. Local SEO is a great way to increase your website’s visibility in local search results. This can help to drive more local traffic to your website and can help you to reach your target audience more effectively.

- Make use of content marketing. Content marketing is a great way to promote your website and increase its visibility. By creating content that is interesting and informative, you can increase the chances of people discovering your website and visiting it.

- Analyze your website’s performance. You should regularly analyze your website’s performance in order to identify any areas that need improvement. This can help you to make the necessary changes to improve your website’s visibility and increase its traffic.

- Utilize Google’s tools. Google offers a variety of tools that can be used to improve your website’s SEO. For example, Google Search Console can be used to identify any errors or issues with your website, and Google Analytics can be used to track your website’s performance and identify areas that need improvement.

Should robots.txt support a feature for no indexation

Robots.txt is a plain-text file that webmasters use to tell web crawlers which parts of a website they may crawl. It is a fundamental element of search engine optimization (SEO), but it controls crawling rather than indexation: a page blocked in robots.txt can still appear in search results if other pages link to it.

The robots.txt file tells search engine spiders which pages and files they should not request. This helps keep crawlers away from areas such as pages that are still under construction. It is not a way to protect sensitive information, because the file itself is publicly readable and a blocked URL can still be indexed without its content.

The robots.txt file must be placed in the root directory of a website. Some crawlers also read a Crawl-delay line in it, which sets how long they should wait between requests; Googlebot ignores that line.

One of the more debated features of robots.txt is the “noindex” directive. It was never part of the robots.txt standard, but Google honored it unofficially for years and stopped doing so on September 1, 2019. The directive told search engine bots not to index a specific page or set of pages, which appealed to webmasters who wanted to keep certain pages out of search engine results.

Despite the appeal of the “noindex” directive, many webmasters are still unsure whether they should be using it in their robots.txt file. A survey conducted by Search Engine Land revealed that only 41% of webmasters are using the “noindex” directive in their robots.txt files.

The survey also showed that the majority of webmasters aren’t aware of the potential benefits of using the “noindex” directive. Most webmasters believe that the robots.txt file should only be used to block search engine bots from crawling certain pages of their website.

In conclusion, a “noindex” feature in robots.txt would be convenient for webmasters who want to prevent certain pages from appearing in search engine results, but major search engines do not support it there today. Webmasters should take the time to understand what “noindex” does and apply it where it is supported: in a robots meta tag on the page, or in an X-Robots-Tag HTTP response header, on pages that crawlers are allowed to fetch.
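As a rough illustration of what robots.txt rules do in practice, the sketch below feeds a small made-up file to Python's standard `urllib.robotparser`. The bot name, paths, and URLs are assumptions for illustration. Note that this parser, like the robots.txt standard, has no notion of a `Noindex` line and simply skips it, so the line has no effect on what may be fetched.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for https://example.com/
ROBOTS_TXT = """\
User-agent: *
Noindex: /private/
Disallow: /drafts/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Disallow is a crawling rule: the draft may not be fetched.
print(parser.can_fetch("ExampleBot", "https://example.com/drafts/post.html"))  # False

# Pages without a matching Disallow rule may be fetched.
print(parser.can_fetch("ExampleBot", "https://example.com/blog/post.html"))  # True

# The unrecognized Noindex line is ignored, so /private/ is still crawlable.
print(parser.can_fetch("ExampleBot", "https://example.com/private/page.html"))  # True

# Crawl-delay is exposed for crawlers that choose to honor it.
print(parser.crawl_delay("ExampleBot"))  # 10

# Page-level ways to request that a fetched page stay out of the index:
META_TAG = '<meta name="robots" content="noindex">'
HTTP_HEADER = "X-Robots-Tag: noindex"
```

A crawler can only see the meta tag or the header if it is allowed to fetch the page, so a page that should stay out of the index must not also be disallowed in robots.txt.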

Yahoo! withdraws from China, becoming the second major American tech company in a month to leave the country

Yahoo! recently announced its withdrawal from China, making it the second major American tech company to leave the country in a month. This decision has sparked debate among political and business leaders around the world. Yahoo! had been active in China since 1999, when it opened its first office in Beijing. The company was initially successful in building up its presence in the country, including launching the Yahoo! China website, establishing partnerships with local companies, and introducing new services. However, Yahoo! gradually began to struggle in the Chinese market. Its website was blocked by the Chinese government and its services were subjected to heavy censorship.

Additionally, Yahoo! faced increasing competition from other tech companies such as Baidu and Alibaba. Given these challenges, Yahoo! decided to pull out of the Chinese market. The company cited the need to focus on its core markets, such as the United States, Europe and Japan, as the primary reason for its withdrawal. The news of Yahoo!’s withdrawal has had a significant impact on the tech sector in China. Many Chinese tech companies had been relying on Yahoo!’s services and partnerships, and now must look for alternative solutions. Additionally, the news has raised questions about the future of foreign tech companies in China.

At the same time, there are some who believe that Yahoo!’s withdrawal could actually be a positive development. Some commentators argue that it could open up the Chinese market to other tech companies, potentially leading to more competition and innovation. Regardless of the ultimate outcome, it is clear that Yahoo!’s withdrawal from China marks a significant shift in the tech sector in the country.

Beginner SEO guide from beginner

A beginner SEO guide from a beginner’s point of view.

Written by a fresher who joined our company recently.

SEARCH ENGINE OPTIMIZATION (SEO)

SEO is a strategy for attracting the largest possible number of visitors to a website by earning it a high rank among the results of search engines such as Google and Yahoo. It can increase a company’s profits and make the company better known among the public.

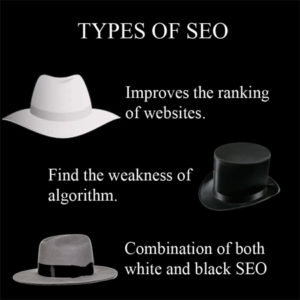

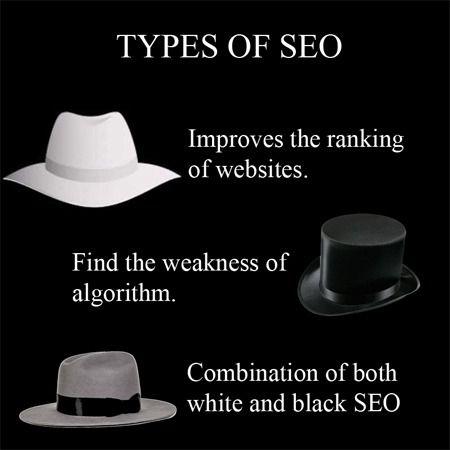

Search engine optimization can be classified into three types. They are:

- White Hat SEO

- Black Hat SEO

- Gray Hat SEO

White Hat SEO:

This type uses up-to-date techniques that follow search engine guidelines to improve a website’s ranking in the search engines. With white hat SEO we can expect stable and progressive growth in the search engine rankings.

Black Hat SEO:

This type of optimization finds weaknesses in a search engine’s algorithm and exploits them to obtain a higher ranking. Black hat SEO is quick to show progress but unpredictable in nature.

Gray Hat SEO:

Gray hat SEO is a combination of white hat SEO and black hat SEO, in which the practitioner shifts from white hat techniques to black hat ones and back again. It is used under pressure from the company or to produce better results. It cannot be used all the time, only to a limited extent.

Two kinds of SEO:

- On-page SEO

On-page SEO is based on the content of the website. How well you build the website determines how familiar the company becomes to people and how quickly its ranking improves when they search by keyword.

Elements of on-page SEO:

- Title of the page

- Description about the page

- Text headings

- Structure of URL

- Use fewer images

- Off-page SEO:

By this guide’s estimate, about 75% of a website’s ranking comes from off-page SEO. It is based entirely on your site’s authority on the internet. To gain authority you need inbound links, that is, links on other websites that point to your website. Links from authoritative websites help you to rise faster in the search engine rankings.
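The on-page elements listed above can be checked automatically. The following is a minimal sketch, using only Python's standard `html.parser`, that pulls the title, meta description, headings, and image count out of a page. The class name `OnPageAudit` and the sample HTML are hypothetical, and a real audit would also look at the URL structure, which this sketch does not cover.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collect the basic on-page SEO elements from an HTML document."""

    HEADING_TAGS = ("h1", "h2", "h3")

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.headings = []      # list of [tag, text] pairs
        self.image_count = 0
        self._current = None    # tag whose text is being collected

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._current = tag
        elif tag in self.HEADING_TAGS:
            self._current = tag
            self.headings.append([tag, ""])
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content")
        elif tag == "img":
            self.image_count += 1

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current is not None:
            self.headings[-1][1] += data


# Hypothetical page used only to exercise the parser.
SAMPLE = """<html><head><title>Diamond Studs in Chennai</title>
<meta name="description" content="Hand-picked diamond studs."></head>
<body><h1>Diamond Studs</h1><img src="a.png"><h2>Wedding range</h2></body></html>"""

audit = OnPageAudit()
audit.feed(SAMPLE)
print(audit.title, audit.description, audit.headings, audit.image_count)
```

Running a check like this over every page makes it easy to spot missing titles or descriptions before worrying about off-page factors.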

SEO in building business:

Before designing a site, the designer should know the company and its objectives very well; only then can the work succeed. A designer who is unsure about the company may still design the site well, but will miss a critical element of a successful business. To be successful, follow SEO strategies by choosing the right keywords and writing good content about the company.

SEO tools:

Keyword analysis tool:

Keywords are an important part of SEO. They should be analyzed and then used in the optimization. Keywords are what connect a site to the results shown as soon as a person searches in a search engine.

Ranking tool:

It is important to know your rankings in the search engines. They show which of your marketing strategies are working well and help you to focus more on those strategies.

Effective content tool:

The content of the website is very important for attracting people and for the growth of the website in search engine rankings. The content should be updated at least once every two days for better results.

What should we expect in the next few months in terms of SEO for Google?

Today’s webmaster video answers the question: what should we expect in the next few months in terms of SEO for Google?

Matt Cutts replies, “We’re taping this video in early May of 2013. So I’ll give you a little bit of an idea about what to expect as far as what Google is working on in terms of the web spam team. In terms of what you should be working on, we try to make sure that that is pretty constant and uniform. Try to make sure you make a great site that users love, that they’ll want to tell their friends about, bookmark, come back to, visit over and over again, all the things that make a site compelling.

We try to make sure that if that’s your goal, we’re aligned with that goal. And therefore, as long as you’re working hard for users, we’re working hard to try to show your high quality content to users as well. But at the same time, people are always curious about, OK what should we expect coming down the pipe in terms of what kinds of things Google is working on? One of the reasons that we don’t usually talk that much about the kinds of things we’re working on is that the plans can change. The timing can change. When we launch things can change. So take this with a grain of salt. This is, as of today, the things that look like they’ve gotten some approval or that look pretty promising. OK, with all those kinds of disclaimers, let’s talk a little bit about the sort of stuff that we’re working on. We’re relatively close to deploying the next generation of Penguin. Internally we call it Penguin 2.0. And again, Penguin is a web spam change that’s dedicated to try to find black hat web spam, and try to target and address that. So this one is a little more comprehensive than Penguin 1.0. And we expect it to go a little bit deeper and have a little bit more of an impact than the original version of Penguin. We’ve also been looking at advertorials, that is sort of native advertising, and those sorts of things that violate our quality guidelines.

So again, if someone pays for coverage or pays for an ad or something like that, those should not flow PageRank. We’ve seen a few sites in the US and around the world that take money and then do link to websites and pass PageRank. So we’ll be looking at some efforts to be a little bit stronger on our enforcements as far as advertorials that violate our quality guidelines. Now there’s nothing wrong inherently with advertorials or native advertising, but they should not flow PageRank. And there should be clear and conspicuous disclosure, so that users realize that something is paid and not organic or editorial. It’s kind of interesting. We get a lot of great feedback from outside of Google. So for example, there were some people complaining about searches like Payday Loans on Google.co.uk. So we have two different changes that try to tackle those kinds of queries in a couple different ways. We can’t get into too much detail about exactly how they work. But I’m kind of excited that we’re going from having just general queries be a little more cleaned to going to some of these areas that have traditionally been a little more spammy, including for example, some more pornographic queries. And some of these changes might have a little bit more of an impact in those kinds of areas that are a little more contested by various spammers and that sort of thing. We’re also looking at some ways to go upstream to deny the value to link spammers.

Some people who spam links in various ways, we’ve got some nice ideas on trying to make sure that becomes less effective. And so we expect that will roll out over the next few months as well. And in fact we’re working on a completely different system that does more sophisticated link analysis. We’re still in the early days for that, but it’s pretty exciting. We’ve got some data now that we’re ready to start munging and see how good it looks. And so we’ll see how that bears fruit or not. We also continue to work on hacked sites in a couple different ways. Number one, trying to detect them better. We hope in the next few months to roll out a next generation of hacked site detection that is even more comprehensive. And also trying to communicate better to webmasters, because sometimes we see confusion between hacked sites and sites that serve up malware. And ideally you would have a one stop shop where once someone realizes that they’ve been hacked, they can go to Webmaster Tools and have some single spot where they could go and get a lot more info to sort of point them in the right way to hopefully clean up those hacked sites. So if you’re doing high quality content whenever you’re doing SEO, this shouldn’t be a big surprise. You shouldn’t have to worry about a lot of different changes. If you’ve been hanging out on a lot of black hat forums and trading different types of spamming package tips and that sort of stuff, then it might be a more eventful summer for you. But we have also been working on a lot of ways to help regular webmasters. So we’re doing a better job of detecting when someone is sort of an authority in a specific space. It could be medical. It could be travel, whatever, and trying to make sure that those rank a little more highly if you’re some sort of authority or a site that, according to the algorithms, we think might be a little bit more appropriate for users.

We’ve also been looking at Panda and seeing if we can find some additional signals, and we think we’ve got some, to help refine things for the sites that are kind of in the border zone, in the grey area a little bit. And so if we can soften the effect a little bit for those sites that we believe have got some additional signals of quality, then that will help sites that might have previously been affected to some degree by Panda. We’ve also heard a lot of feedback from people about, OK, if I go down three pages deep, I’ll see a cluster of several results all from one domain. And we’ve actually made things better in terms of you would be less likely to see that on the first page, but more likely to see that on the following pages. And we’re looking at a change, which might deploy, which would basically say, once you’ve seen a cluster of results from one site, then you’d be less likely to see more results from that site as you go deeper into the next pages of Google search results. And that was good feedback that people have been sending us. We continue to refine our host clustering and host crowding and all those sorts of things. But we will continue to listen to feedback and see what we can do even better. And then we’re going to keep trying to figure out how we can get more information to webmasters. So I mentioned more information for sites that are hacked and ways that they might be able to do things. We’re also going to be looking for ways that we can provide more concrete details, more example URLs that webmasters can use to figure out where to go to diagnose their site. So that’s just a rough snapshot of how things look right now. Things can absolutely change and be in flux, because we might see attacks, different types of things. We need to move our resources around. But that’s a little bit about what to expect over the next few months in the summer of 2013. I think it’s going to be a lot of fun.

I’m really excited about a lot of these changes, because we do see really good improvements in terms of people who are link spamming or doing various black hat spam would be less likely to show up, I think, by the end of the summer. And at the same time, we’ve got a lot of nice changes queued up that hopefully will help small, medium businesses, and regular webmasters as well. That’s just a very quick idea about what to expect in terms of SEO for the next few months as far as Google.”

Turning traffic into sales

Getting traffic is one thing; turning that traffic into sales is another. As more and more people spend more of their time on the Internet, marketers look for innovative and distinctive ways to offer their products and services successfully. Online advertising gives advertisers a wide range of options for generating leads over the internet and is an easy way to get web traffic. These days a website is more than a “must have.” Just as you try to make a good impression in person, it is equally vital to make a good impression on a website visitor. Testimonials are a great way to turn visitors into customers. If you have an e-commerce site, customer reviews will go a long way toward encouraging a potential customer to buy. For example, a Facebook contest that asks your customers what they like about you can generate testimonials for your website and Facebook likes at the same time. A home page can also bring customers to your site: Google AdWords and banner advertising lead customers to it, and if the page they land on presents the details promised in the advertisement, it can bring a great deal of traffic to your site.

Consumers need information quickly. If you don’t provide it, they will go to a competitor’s site. So create a landing page specifically for your promotion and make sure it includes all the information about the promotion. Don’t make your visitors click elsewhere to find what they came for. Make the important things noticeable. If your form is slow and cumbersome, or requires too much information, you will likely lose customers. You can put a lot of money into great external publicity, but if your website is boring or out of date, your hard work will be wasted. Make sure your site is convincing, inspiring, and simple to navigate, and turn more of your visitors into profitable customers.

The worth of long tail keywords in SEO traffic

A long-tail keyword is more of a “phrase” than a single keyword. For example, a targeted keyword is “diamond studs in Chennai,” while a long-tail keyword would be “wedding diamond studs in Egmore, Chennai.” A long-tail keyword is typically four words or more and forms a specific phrase rather than a cluster of general-purpose terms. Long-tail keywords are commonly used in SEO campaigns, yet companies frequently overlook their importance because it is difficult to work them into articles and web content. However, a site that uses long-tail keywords can achieve better SEO than a site overstuffed with short, targeted keywords. Long-tail keywords are more successful at bringing traffic directly to your site. Even if the user alters the phrase, a site that uses long-tail keywords still has a better chance of appearing in search results than a site that does not. Search engine rankings are very important for any site, and using key phrases that are relevant to the general keywords in your SEO campaign can improve them. Before choosing a long-tail keyword, consider its popularity and competition, which you can check with tools such as the Google external keyword tool or KeywordSpy. Create a list of general tools and search for each one. Companies should consider using long-tail keywords for more traffic and higher rankings.